Testing demand is the fastest way to avoid building the wrong product. This guide walks founders through validating a peer-to-peer marketplace MVP with real users, using tactics you can apply this week. It focuses on clear experiments, low-cost recruitment, and metrics that predict growth. Many startups skip this step and waste months. Read on for a pragmatic path to early proof with less guesswork.

Why Early User Feedback Prevents Waste

Early user feedback tells you whether the marketplace can reach product-market fit. Startups often assume supply and demand will sort themselves out. That rarely happens. You need to see both sides engage with a simple offering. Run micro experiments that expose your core value exchange and measure how people react. Combine qualitative insights with behavioral data: record short interviews with users right after they try the flow. Many founders think surveys are enough. They are not. Behavioral signals such as repeat visits, conversion on a booking, and willingness to pay are far more telling. Use early feedback to prune features and sharpen your onboarding. This saves time and money, and it keeps later engineering work focused and effective.

- Test both supply and demand at the same time

- Prioritize behavioral signals over opinions

- Use short interviews to explain why users act

- Avoid building features before testing value

- Measure repeat usage, not just sign-ups

Map The Core Value Exchange

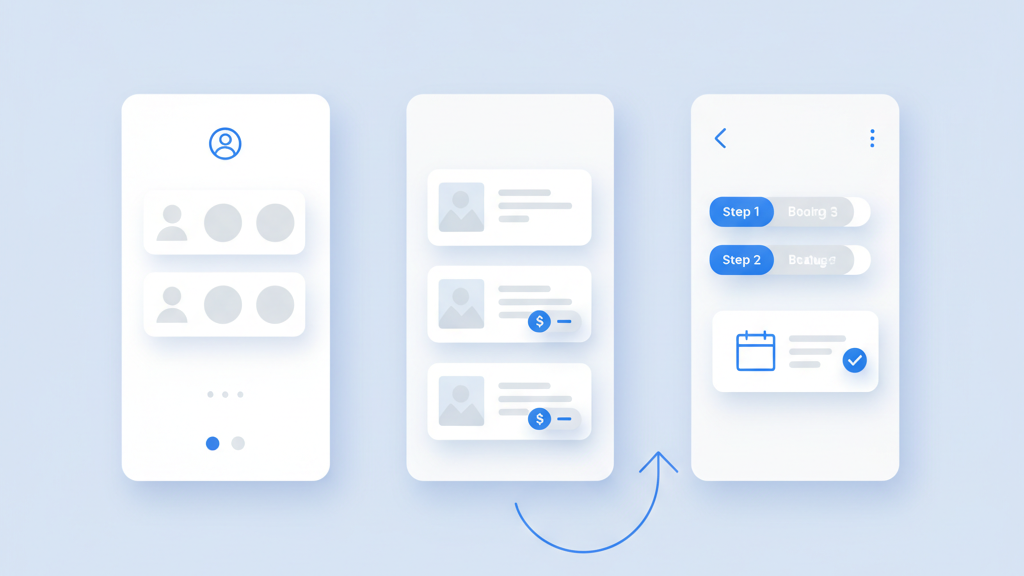

Define the exact exchange that makes your marketplace valuable. Write one sentence that states who benefits, what they get, and what they pay with. This becomes your north star for experiments. A clear value exchange tells you which features you must test and which can wait. Create crude wireframes that show only the steps needed to complete that exchange. Many teams overbuild search filters and profiles before they know whether people will transact. Keep things minimal and observable. The goal is to see either a completed transaction or the exact point where users stop. Use this map to design funnels and user flows that you can instrument. You will learn more from a single completed booking than from dozens of unfinished profiles.

- Write one sentence for the core exchange

- Sketch minimal flows that enable a transaction

- Delay vanity features until demand is proven

- Instrument every step to capture drop-offs

- Use the map to prioritize tests
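Instrumenting every step of the core exchange can start as a few lines of Python. This is a minimal sketch, assuming you log one event per user per step; the step names in `FUNNEL_STEPS` and the `funnel_report` helper are hypothetical, not part of any particular analytics tool. It counts how many users reached each step and what share dropped off since the previous one.

```python
from collections import Counter

# Hypothetical step names for a minimal booking flow; swap in the steps from your own map.
FUNNEL_STEPS = ["view_listing", "request_booking", "confirm_booking", "pay"]


def funnel_report(events):
    """Return (step, users_reached, drop_off_from_previous_step) for each funnel step.

    `events` is an iterable of (user_id, step_name) pairs. A user counts as
    reaching a step only if they also reached every earlier step, so stray
    events (e.g. a "pay" with no listing view) cannot inflate later steps.
    """
    steps_by_user = {}
    for user_id, step in events:
        steps_by_user.setdefault(user_id, set()).add(step)

    reached = Counter()
    for steps in steps_by_user.values():
        for step in FUNNEL_STEPS:
            if step not in steps:
                break  # the user stopped here; later steps do not count
            reached[step] += 1

    report = []
    for i, step in enumerate(FUNNEL_STEPS):
        count = reached[step]
        previous = reached[FUNNEL_STEPS[i - 1]] if i > 0 else count
        drop_off = 1 - count / previous if previous else 0.0
        report.append((step, count, drop_off))
    return report


if __name__ == "__main__":
    sample_events = [
        ("u1", "view_listing"), ("u1", "request_booking"),
        ("u1", "confirm_booking"), ("u1", "pay"),
        ("u2", "view_listing"), ("u2", "request_booking"),
        ("u3", "view_listing"), ("u4", "view_listing"),
    ]
    for step, users, drop in funnel_report(sample_events):
        print(f"{step:<16} {users:>3} users  {drop:.0%} lost since previous step")
```

Counting a step only when every earlier step was also reached keeps the report honest about where users actually stop, which is the signal the value-exchange map is meant to expose.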

Recruit Testers Without Building Everything

You can validate demand before the code is complete by recruiting users into simple manual processes. Use landing pages and targeted ads to capture interested buyers and suppliers. Offer incentives to early adopters and promise early access. Then fulfill requests manually or by email while you observe the flow. This approach gives you real conversations and a chance to refine price, messaging, and trust signals. Many founders prefer to wait for a polished product. That is a costly mistake. Manual fulfillment teaches you the real work required to make transactions happen. Track conversion from sign-up to first transaction and note friction points. These numbers are the foundation for any credible forecast of growth and engineering investment.

- Use landing pages to capture early interest

- Fulfill manually to learn operational work

- Offer clear incentives for early users

- Track conversion from visit to transaction

- Interview first users about friction points
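While you fulfill requests by hand, a spreadsheet export and a small script are enough to track conversion from sign-up to first transaction. The sketch below assumes sign-ups and fulfilled deals are kept in plain Python structures; `first_transaction_conversion` and the sample data are hypothetical. It reports conversion and median days to first transaction separately for buyers and suppliers, since both sides have to move.

```python
from datetime import date
from statistics import median


def first_transaction_conversion(signups, transactions):
    """Conversion from sign-up to first transaction, per marketplace side.

    signups: dict of user_id -> (side, signup_date), where side is "buyer" or "supplier".
    transactions: list of (buyer_id, supplier_id, transaction_date) for fulfilled deals,
        including ones you fulfilled by hand.
    Returns dict of side -> (conversion_rate, median_days_to_first_transaction or None).
    """
    first_tx = {}
    for buyer, supplier, day in transactions:
        for user in (buyer, supplier):
            if user in signups and (user not in first_tx or day < first_tx[user]):
                first_tx[user] = day  # keep the earliest transaction per user

    results = {}
    for side in ("buyer", "supplier"):
        users = [u for u, (s, _) in signups.items() if s == side]
        converted = [u for u in users if u in first_tx]
        days = [(first_tx[u] - signups[u][1]).days for u in converted]
        rate = len(converted) / len(users) if users else 0.0
        results[side] = (rate, median(days) if days else None)
    return results


if __name__ == "__main__":
    # Made-up sample data for illustration only.
    signups = {
        "b1": ("buyer", date(2024, 5, 1)),
        "b2": ("buyer", date(2024, 5, 2)),
        "s1": ("supplier", date(2024, 5, 1)),
        "s2": ("supplier", date(2024, 5, 3)),
    }
    manual_deals = [("b1", "s1", date(2024, 5, 4)), ("b2", "s1", date(2024, 5, 6))]
    print(first_transaction_conversion(signups, manual_deals))
```

Splitting the numbers by side makes an imbalance visible early, for example buyers converting while most suppliers never complete a deal.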

Design Lightweight Flows That Reveal Intent

Design flows that force a decision so you can observe intent. Replace long profiles with simple listings that highlight the outcome. Use clear microcopy that asks for a commitment, such as booking a time or placing a hold. Keep the number of clicks low and track each click as a signal. This reduces noise and produces clean data. Many teams add optional steps that dilute intent, which makes it hard to tell whether users want the core outcome. Run A/B tests that pit a flow requiring a commitment against one that only collects interest, then compare the behavioral lift. The flow that reduces hesitation often reveals real market demand faster than additional features or polish.

- Strip profiles to the essentials

- Ask for a clear commitment from users

- Track each click as a signal

- Run simple A/B tests on flow friction

- Avoid optional steps that dilute intent
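One common way to compare a commitment flow against an interest-only flow is a pooled two-proportion z-test on the share of visitors who go on to complete a transaction. The guide does not prescribe a statistical test, so treat this as one reasonable choice; `compare_flows` and the counts below are illustrative assumptions.

```python
from math import erf, sqrt


def compare_flows(completed_a, visitors_a, completed_b, visitors_b):
    """Pooled two-proportion z-test on the completion rates of flow A and flow B.

    Returns (absolute_lift, z_score, two_sided_p_value), where lift is rate_b - rate_a.
    The normal approximation is rough when either flow has only a handful of completions.
    """
    rate_a = completed_a / visitors_a
    rate_b = completed_b / visitors_b
    pooled = (completed_a + completed_b) / (visitors_a + visitors_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if std_err == 0:
        return rate_b - rate_a, 0.0, 1.0  # no variation at all, nothing to test
    z = (rate_b - rate_a) / std_err
    normal_cdf = 0.5 * (1 + erf(abs(z) / sqrt(2)))  # standard normal CDF at |z|
    return rate_b - rate_a, z, 2 * (1 - normal_cdf)


if __name__ == "__main__":
    # Illustrative counts: A only collects interest, B asks for a hold or booking.
    lift, z, p = compare_flows(12, 200, 27, 200)
    print(f"lift={lift:.1%}  z={z:.2f}  p={p:.3f}")
```

Early marketplaces rarely have much traffic, so even a large lift may not reach significance. In that case, keep the test running longer or treat the result as a directional signal rather than a verdict.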

Choose Metrics That Predict Growth

Pick metrics that are predictive, not descriptive. Vanity metrics make you feel busy, but they do not predict survival. Focus on conversion to first transaction, time to complete a match, and repeat transaction rate. These metrics show whether the marketplace mechanics work at the smallest scale. Also track supply responsiveness, average earnings per supplier, and cancellations. Those numbers reveal whether your pricing, trust, and logistics hold up. Many founders ignore unit economics early. That is risky, because small-scale unit economics hint at whether you can scale profitably. Use a dashboard that updates daily and review it with the team. Quick visibility lets you decide when to invest in growth and where to tighten the experience.

- Measure conversion to first transaction

- Track repeat transactions and cancellations

- Monitor supply responsiveness

- Review small-scale unit economics

- Use daily dashboards for quick insight
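Several of the metrics above can be computed from a plain list of transaction records and fed into whatever dashboard you use. This is a minimal sketch: the `Transaction` fields, the 15% `TAKE_RATE`, and the metric definitions (for example, counting repeat buyers as those with two or more completed deals) are assumptions to adapt to your marketplace.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime
from statistics import median

TAKE_RATE = 0.15  # hypothetical platform commission; use your own


@dataclass
class Transaction:
    buyer: str
    supplier: str
    price: float
    status: str              # "completed" or "cancelled" in this sketch
    requested_at: datetime   # when the buyer asked
    responded_at: datetime   # when the supplier accepted or declined


def daily_metrics(transactions):
    """Compute marketplace health metrics over whatever batch of transactions is passed in."""
    completed = [t for t in transactions if t.status == "completed"]
    cancelled = sum(1 for t in transactions if t.status == "cancelled")

    # Repeat rate: share of buyers with a completed deal who completed two or more.
    deals_per_buyer = Counter(t.buyer for t in completed)
    repeat_buyers = sum(1 for n in deals_per_buyer.values() if n >= 2)

    # Supplier earnings after the platform's cut, per supplier with a completed deal.
    earnings = Counter()
    for t in completed:
        earnings[t.supplier] += t.price * (1 - TAKE_RATE)

    # Supply responsiveness: minutes from request to supplier response.
    response_minutes = [
        (t.responded_at - t.requested_at).total_seconds() / 60 for t in transactions
    ]

    return {
        "cancellation_rate": cancelled / len(transactions) if transactions else 0.0,
        "repeat_buyer_rate": repeat_buyers / len(deals_per_buyer) if deals_per_buyer else 0.0,
        "avg_earnings_per_supplier": sum(earnings.values()) / len(earnings) if earnings else 0.0,
        "median_response_minutes": median(response_minutes) if response_minutes else None,
    }


if __name__ == "__main__":
    t0 = datetime(2024, 5, 1, 9, 0)
    sample = [
        Transaction("b1", "s1", 100.0, "completed", t0, datetime(2024, 5, 1, 9, 10)),
        Transaction("b1", "s1", 100.0, "completed", t0, datetime(2024, 5, 1, 9, 30)),
        Transaction("b2", "s2", 60.0, "completed", t0, datetime(2024, 5, 1, 9, 20)),
        Transaction("b3", "s2", 80.0, "cancelled", t0, datetime(2024, 5, 1, 10, 0)),
    ]
    print(daily_metrics(sample))
```

Because the function works on whatever list you pass in, you can rerun it each day over a fixed window, such as the trailing 30 days, so successive readings on the dashboard are comparable.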

Iterate Based On Real User Signals

Use the data you collect to run short iteration cycles. Prioritize fixes that increase completed transactions and reduce time to match. Run experiments for one to two weeks and treat them as learning sprints. Keep hypotheses small and measurable. When an experiment shows improvement, scale the change slowly to confirm the result. When it fails, document why and move on. Many teams get stuck optimizing the wrong thing because they lack a clear hypothesis. Opinions are cheap. Signals are not. Keep a public log of experiments so the team can see what was tested and why. This builds better judgment and prevents repeating mistakes. Iterate until the marketplace shows repeatable demand and positive unit economics.

- Run short measurable experiments

- Prioritize improvements that increase transactions

- Scale changes slowly after validation

- Document failures and learnings

- Keep an experiment log for team alignment
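An experiment log does not need special tooling. The sketch below keeps each experiment as a small record with its hypothesis, metric, baseline, target, and dates, then renders the log as CSV the team can open anywhere. The `Experiment` fields, the `log_as_csv` helper, and the simple target-based decision rule are all hypothetical; a real decision would usually weigh sample size and noise, not just a single threshold.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from datetime import date
from typing import Optional


@dataclass
class Experiment:
    name: str
    hypothesis: str                 # one small, measurable claim
    metric: str                     # e.g. "completed bookings per week"
    baseline: float
    target: float                   # value that counts as an improvement
    start: date
    end: date                       # keep runs to roughly one to two weeks
    observed: Optional[float] = None
    higher_is_better: bool = True   # False for metrics like cancellation rate
    notes: str = ""

    def decision(self) -> str:
        """Hypothetical decision rule comparing the observed result with the target."""
        if self.observed is None:
            return "running"
        if self.higher_is_better:
            improved = self.observed >= self.target
        else:
            improved = self.observed <= self.target
        return "scale slowly and re-check" if improved else "document why and move on"


def log_as_csv(experiments) -> str:
    """Render the experiment log, with each decision, as CSV text."""
    buffer = io.StringIO()
    columns = [f.name for f in fields(Experiment)] + ["decision"]
    writer = csv.DictWriter(buffer, fieldnames=columns)
    writer.writeheader()
    for exp in experiments:
        row = asdict(exp)
        row["decision"] = exp.decision()
        writer.writerow(row)
    return buffer.getvalue()


if __name__ == "__main__":
    log = [
        Experiment("commit-flow", "Asking for a hold raises completed bookings",
                   "completed bookings per week", 5, 8,
                   date(2024, 5, 1), date(2024, 5, 14), observed=9),
        Experiment("supplier-faq", "A supplier FAQ lowers cancellations",
                   "cancellation rate", 0.20, 0.15,
                   date(2024, 5, 1), date(2024, 5, 14), observed=0.22,
                   higher_is_better=False),
    ]
    print(log_as_csv(log))
```

Recording failed experiments with the same structure as successful ones is what keeps the log useful: the "document why and move on" rows are the ones that stop the team from repeating a test.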