Many founders need fast answers before they raise money or build full-scale products. A FlutterFlow fintech MVP validation service helps founding teams move from idea to usable prototype without months of backend work. This article walks through a pragmatic validation plan that mixes no-code UI, lightweight APIs, and early user testing. You will get a checklist for what to build first, how to test regulatory and payment flows, and how to measure real demand. Many startups skip early testing of edge cases like compliance and fraud detection, so treat this as a practical playbook and not a marketing pitch.

Define The Core Testable Hypothesis

Start by stating the single user problem you want to validate and a measurable outcome. Pick one primary metric that proves demand. For fintech that might be funded transaction volume, the number of onboarded users who pass KYC checks, or conversion from onboarding to first payment. Avoid vague goals like "platform adoption". Write a one-sentence hypothesis that links an action to an outcome and a time window. This keeps the MVP focused and reduces scope creep. A clear hypothesis also helps you decide which parts of the product need real integration and which can be faked. Many founders skip this step and build too much before they know whether users will pay or comply with rules.

- Choose one measurable metric

- Keep scope small and focused

- Decide what must be real and what can be mocked

- Set a time window for validation
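
One way to keep the hypothesis honest is to write it down as data rather than prose. The sketch below is only an illustration: the `Hypothesis` class, its field names, and the example values are all hypothetical, not part of FlutterFlow or any specific tool.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Hypothesis:
    """One testable claim: an action, a metric, a target, and a time window."""
    action: str        # what users are enabled to do
    metric: str        # the single primary metric
    target: float      # threshold that counts as proof of demand
    start: date        # first day of the test
    window_days: int   # length of the validation window

    @property
    def deadline(self) -> date:
        return self.start + timedelta(days=self.window_days)

    def is_met(self, observed: float, as_of: date) -> bool:
        """True only if the target was reached inside the time window."""
        return observed >= self.target and as_of <= self.deadline

    def sentence(self) -> str:
        return (f"If users {self.action}, then {self.metric} will reach "
                f"{self.target:g} within {self.window_days} days.")


# Hypothetical example values, chosen only for illustration.
h = Hypothesis(
    action="can fund a wallet by bank transfer",
    metric="onboarding-to-first-payment conversion",
    target=0.25,
    start=date(2024, 1, 8),
    window_days=30,
)
print(h.sentence())
```

Fixing the target and the window up front makes it harder to move the goalposts once results start coming in.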

Map The Minimal User Journey

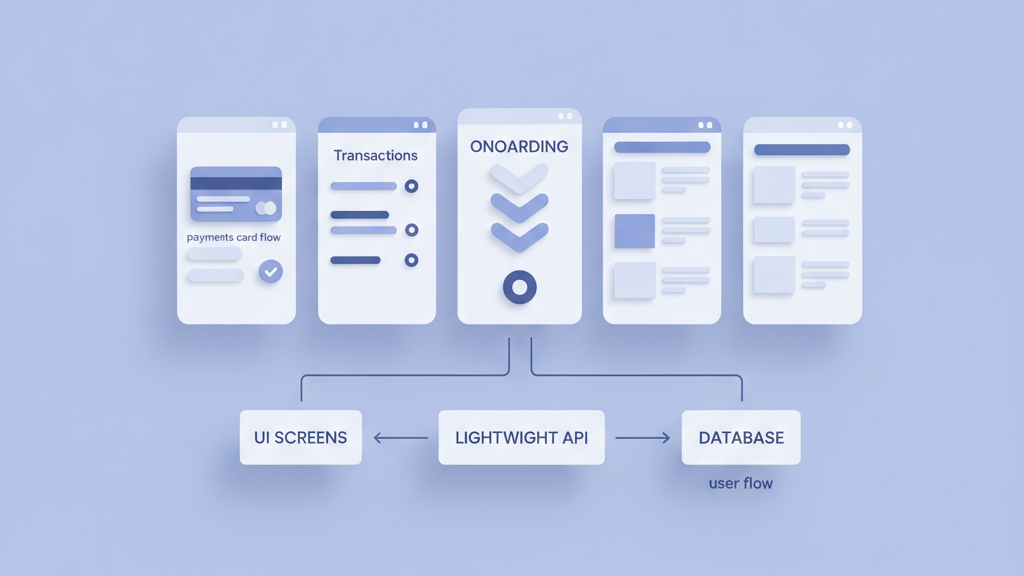

Lay out the steps a user must take to prove the hypothesis. For a fintech app this normally includes discovery, signup, identity verification, funding, and the first transaction. Draw a linear flow and mark risk points where a user could drop off. Prioritize screens that directly impact your primary metric. Use FlutterFlow to build those screens quickly and link them to a simple backend. Skip advanced settings and personalization in the first pass. The goal is to get a working loop that users can complete under realistic conditions. A short journey reduces testing noise and helps isolate which part of the product needs iteration.

- Outline each step from discovery to first transaction

- Mark drop-off risks and prioritize fixes

- Build only screens that affect the primary metric

- Test the full loop end-to-end
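
To find the riskiest step in the journey, you can compute the drop-off between consecutive steps. This is a minimal sketch that assumes you already have a count of users who reached each step; the step names, the counts, and the helper functions are hypothetical.

```python
# Steps of the minimal journey, in order. Names are illustrative.
FUNNEL_STEPS = [
    "discovery",
    "signup",
    "identity_verification",
    "funding",
    "first_transaction",
]


def funnel_report(users_reaching_step: dict[str, int]) -> list[dict]:
    """Return per-step user counts and drop-off relative to the previous step."""
    rows = []
    previous = None
    for step in FUNNEL_STEPS:
        count = users_reaching_step.get(step, 0)
        if previous is None or previous == 0:
            drop = 0.0  # first step, or nobody left to lose
        else:
            drop = 1 - count / previous
        rows.append({"step": step, "users": count, "drop_off": round(drop, 3)})
        previous = count
    return rows


def biggest_risk(rows: list[dict]) -> str:
    """Name of the step that loses the largest share of users."""
    return max(rows, key=lambda r: r["drop_off"])["step"]


# Hypothetical counts from a small test cohort.
counts = {
    "discovery": 200,
    "signup": 120,
    "identity_verification": 60,
    "funding": 45,
    "first_transaction": 30,
}
report = funnel_report(counts)
for row in report:
    print(row)
print("Largest drop-off at:", biggest_risk(report))
```

With these made-up numbers the largest loss is at identity verification, which would be the first screen to iterate on.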

Choose What To Fake And What To Build

Decide which backend pieces require production-grade integration and which can be simulated for the test. Simulate bank flows, ledger consistency, or regulatory responses when those are not central to the hypothesis. Implement real payment rails only when funding or settlement is the outcome being validated. Use feature toggles or mock APIs so you can swap mocks for real services without rebuilding the UI. This saves time and money while keeping the test realistic enough to drive user behavior. A common mistake is to overinvest in perfect integrations before you know whether the flow is valuable to users.

- Mock non-critical integrations

- Use real services for outcome-critical flows

- Add feature toggles for quick swaps

- Document assumptions behind each mock
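
The toggle idea can be sketched on the backend side as two implementations behind one interface, selected by a flag. Everything here is an assumption made for illustration: the `USE_REAL_KYC` flag name, the provider classes, and the mock's rule for rejecting users are invented, and the real provider is deliberately left unimplemented.

```python
import os
from typing import Mapping, Protocol


class KycProvider(Protocol):
    def verify(self, user_id: str) -> str: ...


class MockKycProvider:
    """Simulated check.

    Documented assumption: IDs ending in '0' are rejected so that testers
    also exercise the failure path.
    """

    def verify(self, user_id: str) -> str:
        return "rejected" if user_id.endswith("0") else "approved"


class RealKycProvider:
    """Placeholder for a real vendor integration (not implemented here)."""

    def verify(self, user_id: str) -> str:
        raise NotImplementedError("wire up the real KYC vendor here")


def get_kyc_provider(env: Mapping[str, str] = os.environ) -> KycProvider:
    """Pick the implementation from a toggle; default to the mock."""
    use_real = env.get("USE_REAL_KYC", "false").lower() == "true"
    return RealKycProvider() if use_real else MockKycProvider()


provider = get_kyc_provider({})  # no flag set, so the mock is used
print(provider.verify("user-1"))   # approved
print(provider.verify("user-10"))  # rejected
```

Because the UI only ever calls one endpoint, flipping the flag swaps the mock for the real service without touching any screens.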

Design For Measurement And Compliance

Plan the analytics and compliance logging before you launch the MVP. Track user steps, drop-offs, and conversion to the target metric. Log metadata that helps you debug why users fail identity checks or payments. Store consent timestamps and records for regulatory needs. Keep the data model minimal but sufficient for audits. Instrument key events in FlutterFlow and route them to analytics and logging endpoints. This makes it easier to iterate after each test. Ignoring measurement or compliance can ruin insights and create legal headaches. Many founders treat compliance as a later problem and then face costly rewrites.

- Instrument key events and conversions

- Log consent and KYC outcomes

- Keep data models audit-friendly

- Use simple dashboards for fast analysis
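
A minimal event record for this kind of instrumentation might look like the sketch below. The field names, event names, and helper functions are assumptions, not a regulatory standard or a FlutterFlow API; what you actually need to retain depends on your jurisdiction and should be checked with counsel.

```python
import json
from datetime import datetime, timezone
from typing import Optional


def make_event(user_id: str, name: str, metadata: Optional[dict] = None,
               now: Optional[datetime] = None) -> dict:
    """Build one flat, timestamped event record (UTC, ISO 8601)."""
    ts = (now or datetime.now(timezone.utc)).isoformat()
    return {
        "user_id": user_id,
        "event": name,
        "timestamp": ts,
        "metadata": metadata or {},
    }


def consent_event(user_id: str, policy_version: str,
                  now: Optional[datetime] = None) -> dict:
    """Record when a user accepted which version of the terms."""
    return make_event(user_id, "consent_given",
                      {"policy_version": policy_version}, now)


def kyc_event(user_id: str, outcome: str, reason: Optional[str] = None,
              now: Optional[datetime] = None) -> dict:
    """Record a KYC outcome plus a reason that helps debug failures."""
    return make_event(user_id, "kyc_result",
                      {"outcome": outcome, "reason": reason}, now)


def to_log_line(event: dict) -> str:
    """Serialize as one JSON line, suitable for an append-only log."""
    return json.dumps(event, sort_keys=True)


fixed = datetime(2024, 1, 8, 12, 0, tzinfo=timezone.utc)
print(to_log_line(consent_event("u1", "v1", now=fixed)))
print(to_log_line(kyc_event("u1", "rejected", "document_blurry", now=fixed)))
```

Keeping every event in the same flat shape means a simple dashboard or a spreadsheet export is enough for the first rounds of analysis.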

Recruit The Right Early Users

Find users who represent your early adopters rather than the general market. Use targeted channels like niche forums, professional groups, or existing networks that match the use case. Offer a small incentive that does not bias the behavior you want to measure. Prepare a short script or walkthrough so testers focus on the core value. Collect qualitative feedback alongside metrics to understand motivations and pain points. The goal is to learn quickly and iterate. Avoid broad sampling that dilutes signals and leads to false conclusions about product-market fit.

- Target users who match your early adopter profile

- Use incentives that do not bias behavior

- Collect qualitative feedback on motivations

- Limit the test group to a manageable size

Iterate Fast With FlutterFlow Workflows

Use the rapid UI iteration capabilities in FlutterFlow to try alternative designs and flows. Swap layouts, tweak copy, and change validation rules without heavy engineering cycles. Pair those UI changes with backend mock updates and retest the same cohort where possible. Track how each variant affects your primary metric. Keep iterations small and hypothesis-driven. This approach accelerates learning and reduces wasted build time. In my experience, teams that iterate in small batches learn more than teams that launch large feature sets at once.

- Make small, hypothesis-driven changes

- Retest with the same user cohort when possible

- Measure impact on the primary metric

- Keep UI and backend changes isolated
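
Tracking how each variant moves the primary metric can be as simple as comparing conversion against a baseline. The sketch below assumes you have, per variant, the number of users who converted and the number exposed; the variant names and counts are hypothetical.

```python
def conversion_rate(converted: int, exposed: int) -> float:
    """Share of exposed users who reached the primary metric."""
    return converted / exposed if exposed else 0.0


def compare_variants(results: dict[str, tuple[int, int]],
                     baseline: str) -> dict[str, float]:
    """Absolute change in conversion for each variant versus the baseline.

    `results` maps variant name -> (converted, exposed).
    """
    base = conversion_rate(*results[baseline])
    return {
        name: round(conversion_rate(*counts) - base, 4)
        for name, counts in results.items()
        if name != baseline
    }


# Hypothetical counts from two iterations on the same flow.
results = {
    "current_copy": (12, 40),
    "shorter_kyc_form": (18, 40),
}
print(compare_variants(results, baseline="current_copy"))
```

With cohorts this small, treat the difference as a directional signal rather than a statistically conclusive result, and confirm it in the next cycle.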

Decide When To Scale Or Pivot

After several rapid cycles you will reach a decision point. If the target metric shows strong, repeatable signals, you can plan production-grade integrations and compliance work. If results are mixed, look for small pivots that change the value proposition or the target customer. Use predefined thresholds from your hypothesis to avoid wishful thinking. Be pragmatic about the cost of production integrations versus the expected upside. Many founders hold on to partial signals and waste time. A clear decision framework helps you move from validation to product strategy with confidence.

- Set clear thresholds for go/no-go decisions

- Weigh integration costs against upside

- Consider small pivots before full rebuilds

- Document learnings for future roadmaps
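
A decision rule written before the test starts is one way to guard against wishful thinking. The sketch below is one possible framing, not a standard: the function name, the three-cycle minimum, the lower "floor" for a no-go, and the example numbers are all assumptions you would replace with thresholds from your own hypothesis.

```python
def decide(cycle_results: list[float], go_threshold: float,
           no_go_floor: float, min_cycles: int = 3) -> str:
    """Turn the primary metric from recent test cycles into a decision.

    - "go":           every recent cycle met the target (strong, repeatable)
    - "no-go":        every recent cycle fell below the floor
    - "pivot":        mixed results in between
    - "keep testing": not enough cycles yet to judge repeatability
    """
    if len(cycle_results) < min_cycles:
        return "keep testing"
    recent = cycle_results[-min_cycles:]
    if all(r >= go_threshold for r in recent):
        return "go"
    if all(r < no_go_floor for r in recent):
        return "no-go"
    return "pivot"


# Hypothetical conversion rates from successive cycles.
print(decide([0.30, 0.28, 0.31], go_threshold=0.25, no_go_floor=0.10))
print(decide([0.30, 0.12, 0.26], go_threshold=0.25, no_go_floor=0.10))
```

Requiring the threshold to hold across several cycles is what separates a repeatable signal from a single lucky cohort.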