If you want to test personalization quickly, an AI-powered recommendation software MVP for ecommerce is the right experiment. This guide outlines the priorities to set, the trade-offs to accept, and the metrics to measure. Many startups skip this planning and waste time on features that do not move the needle.

Define Success And Scope

Start by defining what success looks like for your MVP. Pick one or two metrics that matter, such as revenue per visitor, click-through rate, or retention. Focus on a single use case, like homepage recommendations or cart upsells, and keep the launch surface small. Map the user flow and note the minimal data you need to rank products and measure results. Plan a short experiment window and a traffic slice so you can reach a conclusion quickly. An effective MVP is about clear trade-offs and fast learning, not model perfection. A common mistake is trying to build a full platform before validating the core hypothesis. Keep the scope tight and set a decision deadline.

- Pick one primary metric

- Limit to a single use case

- Map minimal data needs

- Define experiment length
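
To make the primary metric concrete, here is a minimal sketch of how revenue per visitor and click-through rate could be computed from session records. The `Session` fields and function names are assumptions made for illustration, not a required schema.

```python
from dataclasses import dataclass


@dataclass
class Session:
    # Hypothetical per-session record; adapt the fields to your own tracking.
    visitor_id: str
    impressions: int  # recommendation slots shown in the session
    clicks: int       # clicks on recommended items
    revenue: float    # order value attributed to the session


def revenue_per_visitor(sessions):
    """Total revenue divided by the number of distinct visitors."""
    visitors = {s.visitor_id for s in sessions}
    if not visitors:
        return 0.0
    return sum(s.revenue for s in sessions) / len(visitors)


def click_through_rate(sessions):
    """Clicks on recommendations divided by recommendation impressions."""
    impressions = sum(s.impressions for s in sessions)
    if impressions == 0:
        return 0.0
    return sum(s.clicks for s in sessions) / impressions
```

Computing the metric the same way for control and treatment traffic, from day one, keeps the later experiment readout comparable.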

Collect The Right Data

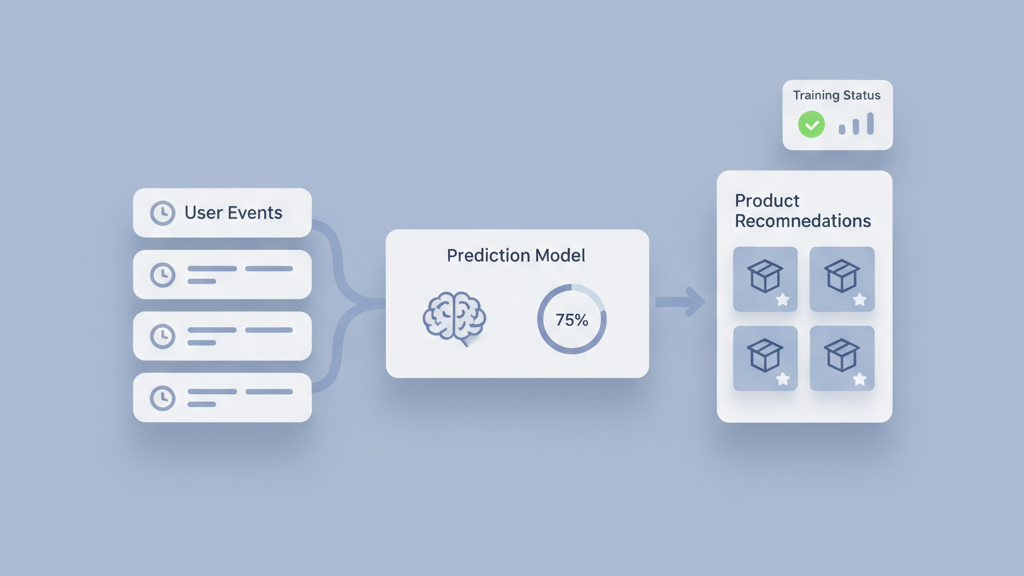

Data quality beats quantity for an early recommender. Start with the user events that are easiest to capture, such as page views, add-to-cart actions, and purchases. Instrument those events with consistent item identifiers and timestamps. Enrich records with simple product attributes such as category and price. Build a lightweight pipeline that batches events and lets you replay them for offline evaluation. Validate your data quickly with basic sanity checks and simple charts. A small, clean dataset lets you iterate faster than a large, messy one. Many teams over-engineer tracking and skip daily checks. Plan early for cold-start items and anonymous users so the product does not feel broken.

- Track core events only

- Use stable item ids

- Add basic product attributes

- Implement simple validation
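
The sanity checks described above can be sketched in a few lines. This example assumes events arrive as dictionaries with `event_type`, `item_id`, `session_id`, and a timezone-aware `timestamp`; those field names and the three allowed event types are illustrative choices.

```python
from datetime import datetime, timezone

# Assumed core event types; extend only when a new event earns its place.
ALLOWED_EVENTS = {"page_view", "add_to_cart", "purchase"}


def validate_event(event, known_item_ids, now=None):
    """Return a list of problems; an empty list means the event passes."""
    now = now or datetime.now(timezone.utc)
    problems = []
    if event.get("event_type") not in ALLOWED_EVENTS:
        problems.append("unknown event_type")
    if event.get("item_id") not in known_item_ids:
        problems.append("unknown item_id")
    ts = event.get("timestamp")
    if not isinstance(ts, datetime) or ts.tzinfo is None:
        problems.append("timestamp missing or not timezone-aware")
    elif ts > now:
        problems.append("timestamp in the future")
    if not event.get("session_id"):
        problems.append("missing session_id")
    return problems


def daily_summary(events, known_item_ids, now=None):
    """Count valid events per type and the number of invalid events."""
    summary = {"invalid": 0}
    for event in events:
        if validate_event(event, known_item_ids, now):
            summary["invalid"] += 1
        else:
            kind = event["event_type"]
            summary[kind] = summary.get(kind, 0) + 1
    return summary
```

Reviewing the output of something like `daily_summary` each day is often enough to catch broken instrumentation before it contaminates an experiment.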

Choose Simple Models And Fallbacks

For an MVP, pick models that are interpretable and quick to train. Start with popularity baselines and simple collaborative filters. Add lightweight content-based scoring when item features are available. Use heuristics for business rules like promoted items or inventory checks. Always include a fallback for cases where the model lacks confidence. A deterministic fallback keeps the experience coherent for new users and low-traffic segments. Keep evaluation straightforward, with offline metrics and a plan for online A/B tests. Avoid complex deep learning unless you have a clear data advantage and time to iterate. Practicality wins at the MVP stage.

- Start with popularity baselines

- Add simple collaborative filters

- Use content scoring where helpful

- Define clear fallbacks
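
As one possible shape for this, the sketch below combines a popularity baseline, a simple item co-occurrence filter (a basic form of item-to-item collaborative filtering), and a deterministic fallback. It assumes each session is a list of item ids; the function names and the `min_support` threshold are hypothetical.

```python
from collections import Counter, defaultdict
from itertools import combinations


def popularity_ranking(sessions):
    """Items ordered by how many sessions contain them (ties broken by id)."""
    counts = Counter(item for s in sessions for item in set(s))
    ranked = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return [item for item, _ in ranked]


def cooccurrence_counts(sessions):
    """For each item, count how often every other item shares a session with it."""
    co = defaultdict(Counter)
    for s in sessions:
        for a, b in combinations(sorted(set(s)), 2):
            co[a][b] += 1
            co[b][a] += 1
    return co


def recommend(item_id, co, popular, k=3, min_support=2):
    """Co-occurrence neighbours first, then fill from the popularity list."""
    neighbours = co.get(item_id, {})
    ranked = sorted(neighbours.items(), key=lambda kv: (-kv[1], kv[0]))
    # Keep only neighbours seen together often enough to be trusted.
    picks = [item for item, n in ranked if n >= min_support]
    # Deterministic fallback: pad with popular items the list does not contain.
    for item in popular:
        if len(picks) >= k:
            break
        if item != item_id and item not in picks:
            picks.append(item)
    return picks[:k]
```

An unknown or cold-start item has no neighbours, so it receives the popularity list unchanged, which keeps the slot filled without any special-case code in the UI.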

Engineer For Iteration Not Perfection

Build infrastructure that supports fast changes. Use modular components for data ingestion, feature generation, model training, and serving. Keep the model interface stable so you can swap algorithms without rewriting UI code. Automate tests for data contracts and add monitoring for latency and errors. Opt for hosted services when they reduce time to market, but avoid vendor lock-in that prevents learning. Log each recommendation decision and tie it to an experiment so you can analyze impact later. A small codebase that you can change in a day is better than a perfect architecture you cannot ship. Many founders underestimate maintenance costs and end up with brittle deployments.

- Modularize pipeline components

- Keep model interfaces stable

- Automate data contract tests

- Log decisions for analysis
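
A stable model interface and decision logging might look like the sketch below. The `Recommender` protocol, the `StaticTopSellers` model, the `serve` function, and the log record fields are all hypothetical names chosen for illustration; the log sink is a plain list standing in for whatever storage you use.

```python
import json
import time
from typing import Protocol


class Recommender(Protocol):
    """The only contract the serving layer and UI depend on."""
    name: str

    def recommend(self, user_id: str, k: int) -> list[str]: ...


class StaticTopSellers:
    """Trivial model that satisfies the interface; swap in others later."""
    name = "static_top_sellers_v1"

    def __init__(self, items):
        self.items = list(items)

    def recommend(self, user_id, k):
        return self.items[:k]


def serve(model: Recommender, user_id, k, experiment, variant, log):
    """Get recommendations and append one JSON decision record to the log."""
    items = model.recommend(user_id, k)
    log.append(json.dumps({
        "ts": time.time(),
        "experiment": experiment,
        "variant": variant,
        "model": model.name,
        "user_id": user_id,
        "items": items,
    }))
    return items
```

Because every record names the experiment, variant, and model, a later analysis can join decisions to outcomes without guessing which algorithm produced which list.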

Design UX For Trust And Control

The user interface matters from day one. Design recommendation placements that feel natural and useful. Provide subtle explanations or affordances to build trust in recommendations. Give users easy controls to dismiss or refine suggestions. Measure engagement metrics that match your success criteria and track negative signals like bounces or quick dismissals. Test copy and layout in small A/B tests to find what drives interaction. Avoid intrusive placements that inflate short-term clicks but harm long-term retention. A modest, trustworthy experience often converts better than aggressive personalization that users find creepy.

- Place recommendations naturally

- Add light controls for users

- Measure engagement and negative signals

- Run small UI A/B tests

Respect Privacy And Compliance

Plan for privacy from the start to avoid rework later. Keep personally identifiable data out of quick experiments when possible. Use aggregated signals and session-level identifiers for early models. Prepare simple consent flows that tie to data collection choices. Document data retention and deletion procedures so you can explain them to customers and auditors. Track regulatory considerations such as CCPA and federal guidance for handling user data in the US. Many startups treat compliance as an afterthought and face expensive changes later. A little effort now prevents rewrites and loss of trust.

- Minimize use of personal data

- Implement clear consent flows

- Document retention rules

- Plan for regional regulations
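
One way to keep raw identifiers out of experiment data is to replace them with keyed hashes and to enforce a retention window in code. The sketch below uses Python's standard `hmac` and `hashlib` modules; the function names and the 90-day default are assumptions for illustration, and keyed hashing is pseudonymization rather than anonymization.

```python
import hashlib
import hmac
from datetime import datetime, timedelta, timezone


def pseudonymize(raw_id: str, secret_key: bytes) -> str:
    """Replace a raw identifier with a keyed SHA-256 digest (hex string)."""
    return hmac.new(secret_key, raw_id.encode("utf-8"), hashlib.sha256).hexdigest()


def apply_retention(events, now, max_age_days=90):
    """Keep only events whose timestamp falls inside the retention window."""
    cutoff = now - timedelta(days=max_age_days)
    return [e for e in events if e["timestamp"] >= cutoff]
```

Keeping the secret key outside the analytics store means the experiment dataset alone cannot be mapped back to the original identifiers.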

Run Experiments And Measure Impact

Set up controlled experiments to validate your assumptions. Use A/B tests or feature flags so you can compare the MVP against the current experience. Decide on statistical power and sample size before you launch, and avoid endless tinkering without a plan. Monitor both business metrics and user experience signals so you can detect negative side effects. Keep experiments short but statistically meaningful, and avoid making decisions on small, noisy samples. Capture qualitative feedback to explain surprising results. When an experiment shows value, expand coverage gradually and keep monitoring for regressions. Many teams celebrate early wins without confirming repeatability and then lose those gains as they scale.

- Use feature flags for rollout

- Define power and sample size

- Track business and UX signals

- Collect qualitative feedback
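
To illustrate the power and rollout points, the sketch below estimates the sample size per arm with the standard normal-approximation formula for comparing two proportions, and assigns users to variants deterministically by hashing. The function names, the defaults of 5% significance and 80% power, and the hashing scheme are assumptions for illustration.

```python
import hashlib
import math
from statistics import NormalDist


def sample_size_per_arm(baseline_rate, relative_lift, alpha=0.05, power=0.8):
    """Approximate visitors needed per arm for a two-sided two-proportion test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p1 - p2) ** 2)


def assign_variant(user_id, experiment, treatment_share=0.5):
    """Stable assignment: the same user and experiment always map to one arm."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # First 8 hex digits as a fraction in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "treatment" if bucket < treatment_share else "control"
```

With a 5% baseline conversion rate and a 10% relative lift, this formula asks for roughly 31,000 visitors per arm, which is a useful reality check on how long the experiment window needs to be. Raising `treatment_share` step by step also gives you the gradual expansion described above, since users already in treatment stay there.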

Scale When The Signal Is Clear

Scale your system once experiments reliably beat the control and metrics are stable across cohorts. At that point, invest in automation around training, deployment, and monitoring. Improve model robustness, add richer features, and tune latency. Revisit privacy and governance controls as volume grows. Plan for operational costs and track costs per experiment so you can estimate ROI. Avoid scaling simply because the prototype is cute. Make sure the business case holds under real traffic and diverse user segments. A measured scale-up keeps you nimble and avoids accumulating technical debt that slows future product work.

- Scale after repeatable wins

- Automate training and deployment

- Monitor costs and ROI

- Avoid premature scaling