Choosing between serverless and containers for scaling a startup product is a common crossroads for founders and product managers. The choice affects cost, time to market, and the team skills you need. This guide breaks down the real trade-offs in plain language, with practical warnings. Many startups pick the option that sounds modern rather than the one that fits their product. I will compare operational overhead, performance patterns, and pricing behavior. You will get quick checks you can run against your roadmap and a simple decision framework for moving from prototype to scale. The goal is to help you pick a path that preserves developer velocity while avoiding surprise bills and operational debt. There is no one-size-fits-all answer, but this guide should make your decision less emotional and more data-driven.

When To Start With Serverless

Serverless shines when you need speed and little upfront operations work. If your product is in early customer discovery and traffic is unpredictable, serverless lets you ship features without managing servers. Managed services handle scaling, patching, and many failure modes for you. Startups get fast iteration and low initial costs from pay-per-request pricing and managed databases. Many founders like this approach because it simplifies deployments and reduces DevOps time. But serverless can hide cost traps once traffic grows and patterns stabilize. It also forces you to design for short-lived processes, which changes how you handle state and transactions: anything that must outlive a single invocation has to live in an external store. Think of serverless as a rapid experimentation platform that you can stay on as long as your access patterns remain bursty and event-driven.

When Containers Make Sense

Containers are the safer choice when you need control over the runtime and resource allocation. If your product depends on heavy CPU work or custom binaries, or requires consistent performance, containers offer predictable environments. They also suit teams that expect steady traffic growth and want to avoid per-request pricing surprises. Containers are portable across clouds and on-prem environments, which helps reduce vendor lock-in. But containers come with operational costs such as cluster management, upgrades, and networking setup. You will likely need CI/CD pipelines and observability from day one. For many startups the right pattern is to move to containers once you have product-market fit and predictable load. That lets you optimize cost and performance later without sacrificing developer productivity during the early phase.

Cost Trade Offs

Cost is the factor that surprises many teams. Serverless billing is simple at first but can grow quickly with high invocation rates and egress. Containers require provisioning, which creates fixed costs even at low utilization. That fixed cost becomes more efficient when load is steady and predictable, because you are mostly paying for capacity you actually use. Run simple cost models based on realistic traffic curves from your roadmap rather than optimistic guesses. Include database, network egress, and monitoring costs in your estimates. Also count developer time as a cost: managing clusters takes time and expertise, which is expensive for a small team. Many startups underestimate the effort needed to optimize costs on either platform. Track cost per active user and cost per request so you can compare real-world numbers as you scale and iterate.
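A cost model does not need to be elaborate to be useful. The Python sketch below compares monthly serverless and container costs across a traffic curve and reports cost per active user. Every price, the per-instance request capacity, and the traffic numbers are hypothetical placeholders rather than any provider's actual rates, and the model covers compute only; database, egress, and monitoring costs would need to be added on top.

```python
import math
from dataclasses import dataclass

# All prices and capacities used here are hypothetical placeholders,
# not any provider's real rates. Substitute your own numbers.

@dataclass
class ServerlessPricing:
    per_million_requests: float  # request fee, USD per 1M invocations
    per_gb_second: float         # compute fee, USD per GB-second
    memory_gb: float             # memory allocated to each function
    avg_duration_s: float        # average execution time per invocation


@dataclass
class ContainerPricing:
    per_instance_month: float     # fixed monthly cost of one always-on instance
    requests_per_instance: float  # monthly requests one instance can serve


def serverless_monthly_cost(requests: float, p: ServerlessPricing) -> float:
    """Pay-per-request: cost scales linearly with invocations."""
    request_fee = requests / 1_000_000 * p.per_million_requests
    compute_fee = requests * p.avg_duration_s * p.memory_gb * p.per_gb_second
    return request_fee + compute_fee


def container_monthly_cost(requests: float, p: ContainerPricing, min_instances: int = 1) -> float:
    """Provisioned capacity: you pay for whole instances, even when they sit idle."""
    needed = max(min_instances, math.ceil(requests / p.requests_per_instance))
    return needed * p.per_instance_month


if __name__ == "__main__":
    sls = ServerlessPricing(per_million_requests=0.20, per_gb_second=0.0000167,
                            memory_gb=0.5, avg_duration_s=0.2)
    ctr = ContainerPricing(per_instance_month=60.0, requests_per_instance=100_000_000)
    # Hypothetical roadmap: (monthly requests, monthly active users)
    curve = [(1_000_000, 2_000), (10_000_000, 15_000),
             (50_000_000, 60_000), (200_000_000, 200_000)]
    for requests, users in curve:
        s = serverless_monthly_cost(requests, sls)
        c = container_monthly_cost(requests, ctr)
        print(f"{requests:>11,} req | serverless ${s:7.2f} (${s / users:.5f}/user)"
              f" | containers ${c:7.2f} (${c / users:.5f}/user)")
```

With these made-up inputs, serverless is cheaper at 1M and 10M requests a month and containers win at 50M and above. Finding that crossover with your own numbers is the point of the exercise.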

Performance And Cold Starts

Performance needs change with user expectations and product complexity. Serverless functions can suffer from cold starts, which add latency to the first request or to requests after an idle period. Modern platforms have reduced this, but it is still a consideration for latency-sensitive features. Containers tend to provide more consistent response times because the runtime stays warm. You can mitigate cold starts by keeping functions warm, but that adds cost. For batch jobs and background processing the difference rarely matters. For real-time features and customer-facing APIs, run load tests that mirror expected user flows. Measure tail latency (p95, p99), not just the median. A small fraction of cold starts can leave the median untouched while dominating the slowest requests, so tail percentiles expose what users actually experience and help you decide whether the convenience of serverless is worth the latency trade-off.
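To see how a median can hide cold starts, here is a small Python sketch that computes nearest-rank percentiles from latency samples. The sample data is synthetic (a hypothetical 2% of requests paying roughly 800 ms of extra startup time), not a measurement of any platform; in practice you would feed in the latencies recorded by your own load test.

```python
import math
import random


def percentile(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample such that at least
    pct% of all samples are at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(samples: list[float]) -> dict[str, float]:
    """Report the median and tail percentiles together so the tail cannot hide."""
    return {name: percentile(samples, p) for name, p in (("p50", 50), ("p95", 95), ("p99", 99))}


if __name__ == "__main__":
    random.seed(7)
    # Synthetic latencies in ms, not a benchmark: warm requests near 40 ms,
    # plus a hypothetical 2% of requests that pay ~800 ms of cold-start time.
    samples = [random.gauss(40, 5) + (800 if random.random() < 0.02 else 0)
               for _ in range(10_000)]
    print(summarize(samples))
```

On this synthetic data, p50 and p95 should stay near the warm latency while p99 lands in the cold-start range, which is exactly the gap a median-only dashboard would miss.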

Operational Complexity And Team Skills

Team skills often determine the right approach more than raw technology. Serverless lowers the bar for operations but raises design complexity around state and integration. Containers require knowledge of orchestrators, networking, and resource tuning. If your team has limited DevOps experience, you may prefer serverless to avoid early operational debt. Conversely, if you have backend engineers familiar with Linux and Docker, containers let you standardize on a reproducible runtime. Tooling like managed Kubernetes reduces some of the burden but does not remove the need for monitoring and incident handling. Many founders underestimate the time it takes to build reliable pipelines and observability. Make hiring plans that match the chosen path, and be honest about which skills you can acquire or outsource.

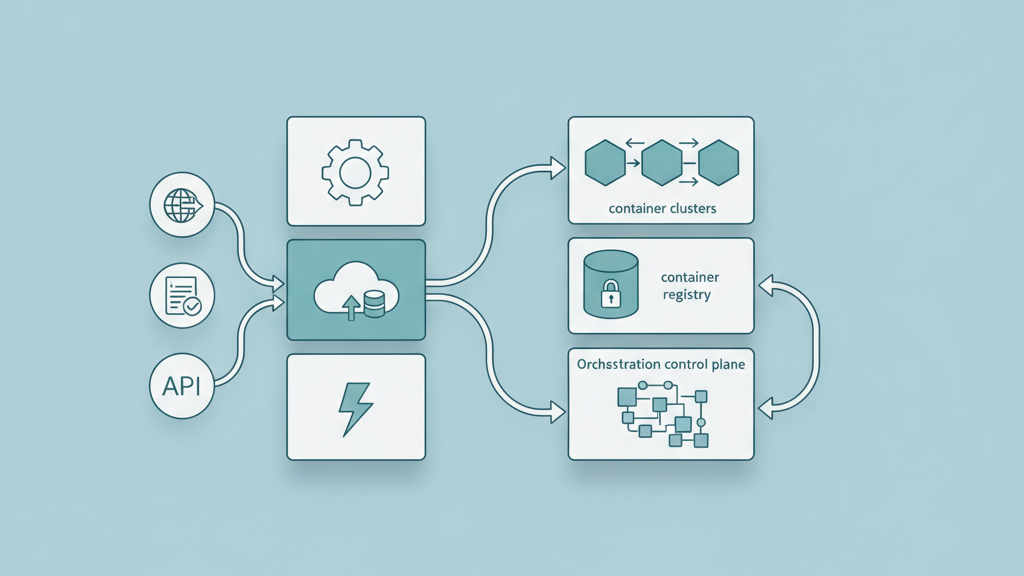

Scaling Patterns And Architecture

Scaling is not only about adding more instances. Design for graceful degradation and proportional scaling from the start. Event-driven architectures work well with serverless and reduce coupling between services. Containers favor horizontal scaling and allow stateful sidecars when needed. For database scaling, consider read replicas, caching layers, and partitioning strategies. Use queues to absorb traffic spikes and to decouple synchronous paths from heavy background processing. Also think about operational scaling, such as deployment cadence and rollback strategies. Many startups forget to automate rollbacks and gradual rollouts, which increases risk as traffic grows. Choose patterns that match your expected growth trajectory, and instrument everything to detect pressure points early.
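Queue-based load leveling is easy to reason about with a toy simulation. The Python sketch below feeds bursty arrivals into a FIFO queue drained by workers with a fixed capacity per tick, and reports the backlog after each tick plus the longest wait any job experienced. The tick-based model and the numbers in the example are illustrative assumptions, not a model of any particular queue service.

```python
from collections import deque


def simulate_queue(arrivals: list[int], capacity: int) -> tuple[list[int], int]:
    """Simulate a FIFO queue drained by workers that finish at most
    `capacity` jobs per tick.

    Returns the backlog left after each tick and the longest wait (in ticks)
    any job spent in the queue.
    """
    queue: deque[int] = deque()  # each entry records the tick the job arrived
    backlog: list[int] = []
    max_wait = 0
    for tick, arriving in enumerate(arrivals):
        queue.extend([tick] * arriving)
        # Workers take the oldest jobs first, up to their per-tick capacity.
        for _ in range(min(capacity, len(queue))):
            arrived = queue.popleft()
            max_wait = max(max_wait, tick - arrived)
        backlog.append(len(queue))
    return backlog, max_wait


if __name__ == "__main__":
    # Steady traffic of 2 jobs per tick with one burst of 10, against capacity 4.
    print(simulate_queue([2, 2, 10, 2, 2, 0, 0], capacity=4))
```

Here `simulate_queue([2, 2, 10, 2, 2, 0, 0], capacity=4)` returns `([0, 0, 6, 4, 2, 0, 0], 2)`: the burst becomes a backlog that drains within three ticks and no job waits more than two ticks, instead of six requests exceeding capacity on a synchronous path.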

A Practical Decision Framework

Make the choice with a short, experiment-driven plan that limits regret. Start by listing your main workloads and classifying them by duration, CPU needs, and statefulness. If most workloads are short-lived and event-driven, use serverless to move fast. If you have steady compute or custom runtimes, prefer containers. Run a cost and performance pilot for a month and gather real metrics. Set thresholds in advance for when you will move services between platforms. For example, move a service to containers when its monthly serverless bill exceeds what equivalent container capacity would cost, or when its latency breaches your SLA. Many startups never set these thresholds and linger in the wrong model. Finally, keep your architecture modular so you can migrate pieces without a full rewrite when traffic patterns change.
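The framework can be written down as a first-pass classification rule plus a migration trigger agreed on before the pilot starts. In this Python sketch, the `Workload` fields, the 30-second cutoff for "short-lived", and the cost and latency figures are all hypothetical choices for illustration; adjust them to your platform's execution limits and your own pilot data.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    avg_duration_s: float  # typical run time of one unit of work
    cpu_heavy: bool        # sustained CPU use or custom binaries
    stateful: bool         # needs in-process state or long-lived connections
    event_driven: bool     # triggered by events rather than running continuously


# Hypothetical cutoff; tune it to your platform's limits and your own data.
MAX_SHORT_LIVED_S = 30.0


def suggest_platform(w: Workload) -> str:
    """Short-lived, event-driven, stateless work starts on serverless;
    steady compute, custom runtimes, or stateful work starts on containers."""
    short_lived = w.avg_duration_s <= MAX_SHORT_LIVED_S
    if short_lived and w.event_driven and not w.cpu_heavy and not w.stateful:
        return "serverless"
    return "containers"


def should_migrate_to_containers(monthly_serverless_cost: float,
                                 monthly_container_estimate: float,
                                 p99_latency_ms: float,
                                 sla_p99_ms: float) -> bool:
    """Trigger set in advance: serverless costs more than equivalent
    container capacity, or tail latency breaches the SLA."""
    return (monthly_serverless_cost > monthly_container_estimate
            or p99_latency_ms > sla_p99_ms)


if __name__ == "__main__":
    workloads = [
        Workload("webhook-handler", 0.3, cpu_heavy=False, stateful=False, event_driven=True),
        Workload("video-transcode", 600.0, cpu_heavy=True, stateful=False, event_driven=True),
        Workload("websocket-gateway", 3600.0, cpu_heavy=False, stateful=True, event_driven=False),
    ]
    for w in workloads:
        print(w.name, "->", suggest_platform(w))
    # Hypothetical pilot metrics checked against thresholds agreed up front.
    print(should_migrate_to_containers(374.0, 120.0, p99_latency_ms=180.0, sla_p99_ms=300.0))
```

The rule is deliberately blunt: it gives each workload a starting point, and the migration trigger, not a one-time debate, decides when a service moves.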