This guide walks startup founders and product managers through a repeatable process for setting feature release milestones and goals that match business outcomes. I cover planning steps, common traps, and ways to measure success. Many startups miss this alignment and ship features that do not move metrics.

Start With Business Outcomes

Begin by stating the business outcomes your product needs to deliver. Avoid vague goals and focus on measurable changes in user behavior or revenue. Translate strategy into a few clear outcomes, rank them by impact, and use them to decide which features matter. Many teams pick features because they are visible, not because they move a metric. Define a hypothesis for each outcome and note how a feature would test it. This makes release planning more evidence-based and gives product managers a defensible way to prioritize. When outcomes are explicit, the team moves faster and stakeholders trust trade-offs more. Keep the list short and revisit it each quarter.

- State measurable business outcomes

- Rank outcomes by impact

- Write a hypothesis per outcome

- Avoid feature bias

- Review outcomes each quarter

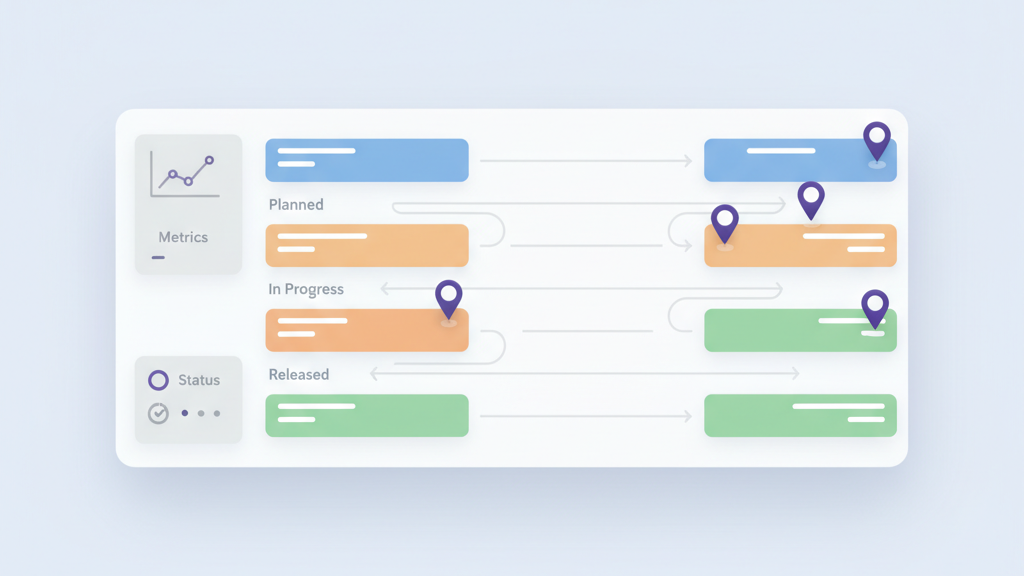

Break Outcomes Into Milestones

Turn outcomes into time-bound milestones that reflect progress, not perfection. A milestone should show a meaningful step toward the outcome, such as a prototype, a user test batch, or an initial release with tracking. Choose milestones that can be validated within a sprint or two so you get feedback quickly. Milestones also help with communication because they set clear expectations for stakeholders. Many founders expect one big launch and are then surprised by slow learning loops. Use milestone gates to decide whether you proceed, pivot, or stop. This reduces sunk cost and keeps the team focused on validated learning rather than feature completion alone.

- Define time-bound milestones

- Make milestones measurable

- Use quick validation steps

- Set go/no-go gates

- Focus on learning, not perfection
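
A milestone gate can be written down as an explicit rule so the proceed, pivot, or stop call is agreed before results arrive. The sketch below is one hypothetical way to do that in Python: the `MilestoneResult` fields, the `gate_decision` function, and the `pivot_floor` threshold of 25% are all illustrative assumptions, not a standard. It compares the lift actually observed against the lift the hypothesis predicted.

```python
from dataclasses import dataclass
from enum import Enum


class GateDecision(Enum):
    PROCEED = "proceed"
    PIVOT = "pivot"
    STOP = "stop"


@dataclass
class MilestoneResult:
    name: str
    baseline: float  # metric value before the milestone shipped
    observed: float  # metric value measured after the milestone
    target: float    # metric value the hypothesis predicted


def gate_decision(result: MilestoneResult, pivot_floor: float = 0.25) -> GateDecision:
    """Decide a gate from the share of the predicted lift that was achieved.

    pivot_floor is a hypothetical threshold: below it, the milestone
    produced too little signal to justify another iteration.
    """
    expected_lift = result.target - result.baseline
    if expected_lift <= 0:
        raise ValueError("target must be above baseline")
    achieved = (result.observed - result.baseline) / expected_lift
    if achieved >= 1.0:
        return GateDecision.PROCEED  # hypothesis held, plan the next milestone
    if achieved >= pivot_floor:
        return GateDecision.PIVOT    # partial signal, change the approach
    return GateDecision.STOP         # little or no signal, stop investing


# Example: activation was expected to move from 20% to 25% but reached 22%.
print(gate_decision(MilestoneResult("onboarding v1", 0.20, 0.22, 0.25)))
```

The exact thresholds matter less than fixing them before the milestone starts, which keeps the gate from being renegotiated after the data is in.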

Map Features To Goals

Connect each planned feature to a specific outcome and a milestone. Avoid broad maps that say a feature supports many goals without clarity. Instead, pick the primary metric the feature is meant to move and the user action it targets. This makes success criteria clear during planning and review. If a feature cannot show how it moves a metric, challenge its priority. Mapping also surfaces dependencies and helps the team sequence work so that early releases unlock later ones. In practice, this mapping forces trade-offs and highlights experiments that are cheap to run. It is a modest step that pays off because it aligns engineering effort with the company's direction.

- Assign one primary metric per feature

- Describe the target user action

- Call out dependencies early

- Sequence for learning

- Drop features with unclear impact
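
The mapping can live in a spreadsheet, but a small script makes the rules checkable. The following is a minimal sketch under stated assumptions: the `Feature` record, its field names, the `plan_sequence` helper, and the example features are all hypothetical. It drops features that lack a primary metric or target action and orders the rest so dependencies come first, using `graphlib.TopologicalSorter` from the Python standard library (Python 3.9+).

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter
from typing import Optional


@dataclass
class Feature:
    name: str
    primary_metric: Optional[str]  # the one metric this feature should move
    target_action: Optional[str]   # the user action it targets
    depends_on: list[str] = field(default_factory=list)


def plan_sequence(features: list[Feature]) -> tuple[list[str], list[str]]:
    """Return (build order, dropped feature names)."""
    # Features with no metric or no target action have unclear impact.
    dropped = [f.name for f in features
               if not f.primary_metric or not f.target_action]
    kept = {f.name: f for f in features if f.name not in dropped}
    # Map each kept feature to the kept features it depends on.
    # Dependencies on dropped or unknown features are ignored here.
    graph = {name: [d for d in f.depends_on if d in kept]
             for name, f in kept.items()}
    # static_order() yields dependencies before the features that need them
    # and raises graphlib.CycleError if the dependencies are circular.
    order = list(TopologicalSorter(graph).static_order())
    return order, dropped


features = [
    Feature("checkout redesign", "checkout conversion", "complete purchase",
            depends_on=["checkout event tracking"]),
    Feature("checkout event tracking", "checkout conversion", "complete purchase"),
    Feature("dark mode", None, None),
]
order, dropped = plan_sequence(features)
print("build order:", order)
print("dropped:", dropped)
```

In a real roadmap a dropped feature would be challenged in review rather than deleted automatically; the script only makes the gap visible.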

Estimate And Timebox Work

Use rough estimates to size features and group work into timeboxed slices that produce usable outcomes. Timeboxes force prioritization and limit scope creep. Ask teams to propose a minimal viable slice that can be delivered in a single milestone. This may mean shipping a partial UI, or an API with instrumentation only. Many startups underestimate engineering work and then miss milestones. Keep estimates loose but hold to timeboxes, and use demo days to check progress. If a slice does not deliver the expected signal, cut the next slice and reassess. This way you preserve momentum and protect roadmap health while still learning quickly from real user data.

- Create rough effort estimates

- Define minimal viable slices

- Enforce short timeboxes

- Demo progress each milestone

- Adjust scope after feedback

Instrument For Measurement

Measurement is non-negotiable if release milestones are to mean anything. Add instrumentation to track the target metric before the release so you have a reliable baseline. Decide which events and funnels you need to capture, and test the analytics early in the development cycle. Many teams ship without proper tracking and then debate results instead of learning. Make measurement part of the definition of done and automate dashboards that show progress toward milestones. If you use experiments, plan the A/B test design and required sample sizes up front. Good instrumentation shortens decision time and reduces opinion-driven debates after launch.

- Track baseline metrics first

- Instrument key events and funnels

- Make tracking part of done

- Automate milestone dashboards

- Plan experiments early
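
Planning sample sizes up front can be a short calculation. The sketch below uses the common normal-approximation formula for comparing two conversion rates in a two-sided test; the function name `sample_size_per_variant`, the default 5% significance level, and the default 80% power are assumptions chosen for illustration, and a real experiment may need a different test or corrections.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, expected_rate: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate users needed in each variant of a two-variant A/B test.

    n = (z_{1-alpha/2} + z_{power})^2 * (p1(1-p1) + p2(1-p2)) / (p2 - p1)^2
    """
    if not (0 < baseline_rate < 1 and 0 < expected_rate < 1):
        raise ValueError("rates must be between 0 and 1")
    if baseline_rate == expected_rate:
        raise ValueError("expected_rate must differ from baseline_rate")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_power = NormalDist().inv_cdf(power)          # desired statistical power
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return ceil((z_alpha + z_power) ** 2 * variance / effect ** 2)


# Example: detecting a lift from a 10% to a 12% conversion rate.
print(sample_size_per_variant(0.10, 0.12))
```

With these defaults, detecting a lift from 10% to 12% needs a few thousand users per variant, which is worth knowing before committing a milestone to an experiment the product's traffic cannot support within the timebox.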

Align Stakeholders With Simple Signals

Keep stakeholder communication focused on a few simple signals that indicate progress. Use milestones, key metrics, and demo outcomes as the main narrative. Avoid long status reports that list tasks rather than results. Regularly show the evidence that a milestone moved or failed to move a metric, and explain next steps. Many founders appreciate blunt updates more than optimism dressed as detail. Use short alignment rituals such as a milestone review meeting and a single-page roadmap that highlights risks. Clear signals reduce misaligned expectations and make prioritization faster.

- Share a few key signals

- Use milestone review meetings

- Prefer evidence over optimism

- Keep a one-page roadmap

- Highlight risks early

Plan For Risks And Dependencies

Identify technical and business risks when you set milestones and assign mitigations to early slices. Call out external dependencies such as partner APIs or platform releases and build contingency plans. Many projects stall here because teams do not plan for third-party delays. Create parallel paths that allow progress if a dependency slips. For risky features, budget time for spikes that reduce uncertainty before counting a milestone as achievable. Review risk status at each milestone gate and decide whether you continue, pivot, or shelve the work. This discipline saves time and keeps the roadmap realistic.

- List technical and business risks

- Track external dependencies

- Run early spikes for unknowns

- Build contingency paths

- Review risk at each gate

Iterate Based On Outcomes Not Completion

Use milestone results to guide the next planning cycle. If a milestone shows the expected metric movement, scale the work and plan follow-on milestones. If not, treat the outcome as learning and change direction. Avoid equating feature completion with success. Teams that focus only on delivery often miss the chance to iterate toward product-market fit. Keep release cycles short and use the measurement data to refine hypotheses. Celebrate small wins and document failures so the team can apply the lessons. This adaptive process keeps the roadmap flexible and increases the odds of shipping features that matter to users and the business.

- Decide next steps by outcome

- Scale work only when validated

- Treat failures as learning

- Keep cycles short

- Document lessons learned